BonsaiDb Commerce Benchmark

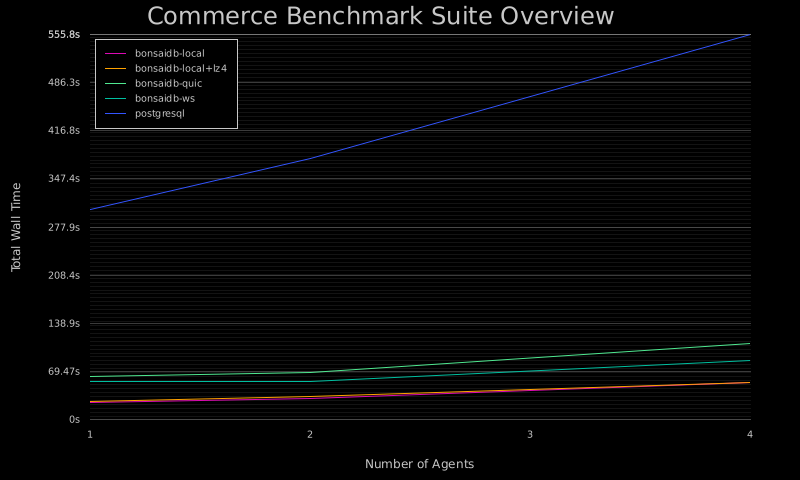

This benchmark suite is designed to simulate the types of loads that an ecommerce application might see under different levels of concurrency and traffic patterns. As with all benchmark suites, the results should not be taken as proof that any database will or will not perform well for a particular application. Each application's needs differ greatly, and this benchmark is designed to help BonsaiDb's developers notice areas for improvement.

| Dataset Size | Traffic Pattern | Concurrency | bonsaidb-local | bonsaidb-local+lz4 | bonsaidb-quic | bonsaidb-ws | postgresql | Report |
|---|---|---|---|---|---|---|---|---|
| small | balanced | 1 | 1.508s | 1.237s | 4.386s | 3.675s | 7.289s | Full Report |
| small | balanced | 2 | 1.429s | 1.475s | 4.642s | 3.404s | 9.188s | Full Report |
| small | balanced | 4 | 2.035s | 2.178s | 7.567s | 5.570s | 15.79s | Full Report |
| small | readheavy | 1 | 790.1ms | 826.6ms | 3.305s | 2.944s | 4.828s | Full Report |
| small | readheavy | 2 | 889.6ms | 848.4ms | 3.377s | 2.336s | 6.385s | Full Report |
| small | readheavy | 4 | 1.302s | 1.338s | 5.526s | 4.176s | 10.53s | Full Report |
| small | writeheavy | 1 | 5.443s | 6.250s | 12.54s | 11.08s | 32.19s | Full Report |
| small | writeheavy | 2 | 6.702s | 7.741s | 14.58s | 11.68s | 49.77s | Full Report |
| small | writeheavy | 4 | 14.64s | 14.17s | 22.84s | 18.13s | 86.28s | Full Report |
| medium | balanced | 1 | 1.491s | 1.552s | 4.734s | 4.234s | 14.96s | Full Report |
| medium | balanced | 2 | 1.977s | 1.908s | 4.849s | 4.153s | 18.13s | Full Report |
| medium | balanced | 4 | 2.546s | 2.538s | 8.099s | 6.211s | 23.69s | Full Report |
| medium | readheavy | 1 | 866.0ms | 943.8ms | 3.509s | 2.965s | 12.15s | Full Report |
| medium | readheavy | 2 | 1.159s | 1.277s | 3.699s | 2.597s | 14.77s | Full Report |
| medium | readheavy | 4 | 1.742s | 1.970s | 5.907s | 4.390s | 17.12s | Full Report |
| medium | writeheavy | 1 | 5.785s | 5.780s | 12.35s | 10.80s | 44.56s | Full Report |
| medium | writeheavy | 2 | 7.875s | 7.502s | 13.48s | 11.08s | 59.60s | Full Report |
| medium | writeheavy | 4 | 11.91s | 12.02s | 21.30s | 17.15s | 107.7s | Full Report |
| large | balanced | 1 | 1.973s | 2.133s | 5.372s | 4.448s | 51.47s | Full Report |
| large | balanced | 2 | 2.182s | 2.299s | 5.292s | 4.274s | 52.73s | Full Report |
| large | balanced | 4 | 3.067s | 3.019s | 8.226s | 6.389s | 60.96s | Full Report |
| large | readheavy | 1 | 1.421s | 1.436s | 3.830s | 3.261s | 48.38s | Full Report |
| large | readheavy | 2 | 1.510s | 1.562s | 3.988s | 3.067s | 50.46s | Full Report |
| large | readheavy | 4 | 2.231s | 2.191s | 6.824s | 5.106s | 59.43s | Full Report |
| large | writeheavy | 1 | 6.215s | 6.640s | 12.87s | 11.69s | 86.77s | Full Report |
| large | writeheavy | 2 | 7.916s | 8.571s | 14.83s | 12.14s | 115.5s | Full Report |
| large | writeheavy | 4 | 14.11s | 14.79s | 23.37s | 19.17s | 174.3s | Full Report |

Dataset Sizes

The three dataset sizes are named "small", "medium", and "large". All of the benchmarked databases can handle datasets much larger than "large", but it is currently impractical to run larger benchmarks on a regular basis. Each run's individual page shows a breakdown of the initial dataset by type.

Traffic Patterns

This suite uses a probability-based system to generate plans for agents to process concurrently. These plans follow a "funnel" pattern: searching, adding to cart, checking out, and reviewing the purchased items. Each stage in the funnel is assigned a probability, and these probabilities are tuned to simulate three traffic patterns: a read-heavy pattern ("readheavy") that performs more searches than purchases, a write-heavy pattern ("writeheavy") in which most plans result in purchasing and reviewing products, and a balanced pattern ("balanced") meant to simulate a moderate amount of write traffic.
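To make the funnel idea concrete, here is a minimal Python sketch of probability-based plan generation. It is not BonsaiDb's actual implementation: the `TrafficPattern` type, the `generate_plan` function, and all probability values are hypothetical, and each plan is simplified to a single pass through the funnel. A stage after searching is reached only if a random roll falls below that stage's probability, so raising the probabilities shifts traffic from reads toward writes.

```python
import random
from dataclasses import dataclass


# Hypothetical probabilities for illustration only; the benchmark's real
# values are not listed in this document.
@dataclass(frozen=True)
class TrafficPattern:
    add_to_cart: float  # chance a search leads to adding an item to the cart
    checkout: float     # chance a filled cart leads to a purchase
    review: float       # chance a purchase leads to a product review


READ_HEAVY = TrafficPattern(add_to_cart=0.25, checkout=0.2, review=0.1)
BALANCED = TrafficPattern(add_to_cart=0.5, checkout=0.5, review=0.25)
WRITE_HEAVY = TrafficPattern(add_to_cart=0.9, checkout=0.9, review=0.8)

# Funnel stages in order; a plan is always a prefix of this sequence.
FUNNEL = ("search", "add_to_cart", "checkout", "review")


def generate_plan(pattern: TrafficPattern, rng: random.Random) -> list[str]:
    """Walk the funnel in order, stopping at the first stage whose roll fails."""
    plan = [FUNNEL[0]]  # every plan starts with a search
    stage_probabilities = (pattern.add_to_cart, pattern.checkout, pattern.review)
    for stage, probability in zip(FUNNEL[1:], stage_probabilities):
        # rng.random() is uniform in [0.0, 1.0), so this check passes with `probability`.
        if rng.random() >= probability:
            break
        plan.append(stage)
    return plan


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are reproducible
    patterns = (("readheavy", READ_HEAVY), ("balanced", BALANCED), ("writeheavy", WRITE_HEAVY))
    for name, pattern in patterns:
        plans = [generate_plan(pattern, rng) for _ in range(10_000)]
        purchases = sum("checkout" in plan for plan in plans)
        print(f"{name}: {purchases / len(plans):.1%} of plans end in a purchase")
```

Because each stage's probability is conditional on reaching the previous one, the share of plans that write (purchase or review) is the product of the probabilities along the way, which is what makes small tweaks produce very different read/write mixes.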

Concurrency

The suite is configured to run the plans at up to three concurrency levels, depending on the number of CPU cores present: 1 agent, 1 agent per core, and 2 agents per core.
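One plausible reading of "up to three" is that identical levels are run only once: on a single-core machine, "1 agent" and "1 agent per core" are the same run. The following minimal Python sketch shows that selection; `concurrency_levels` is a hypothetical helper, not part of the benchmark's code.

```python
import os


def concurrency_levels(cores: int) -> list[int]:
    """Return the distinct agent counts to benchmark for a given core count.

    Candidates are 1 agent, 1 agent per core, and 2 agents per core;
    duplicates are dropped, so there are at most three levels.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    levels: list[int] = []
    for agents in (1, cores, cores * 2):
        if agents not in levels:  # skip levels that collapse onto an earlier one
            levels.append(agents)
    return levels


if __name__ == "__main__":
    # os.cpu_count() can return None, so fall back to a single core.
    print(concurrency_levels(os.cpu_count() or 1))
```

With two cores this yields 1, 2, and 4 agents, matching the concurrency values in the table above; with one core it yields only two levels (1 and 2).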